When it comes to the world wide web, there are both bad bots and good bots. You definitely want to avoid the bad bots, as they consume your CDN bandwidth, take up server resources, and steal your content. Good bots (also known as web crawlers), on the other hand, should be handled with care, as they are a vital part of getting your content indexed by search engines such as Google, Bing, and Yahoo. Read on for 10 of the most common web crawlers and their user agents so you can be sure you are handling them correctly.

Web crawlers

Web crawlers, also known as web spiders or internet bots, are programs that browse the web in an automated manner for the purpose of indexing content. Crawlers can look at all sorts of data such as content, links on a page, broken links, sitemaps, and HTML code validation.

Search engines like Google, Bing, and Yahoo use crawlers to index downloaded pages so that users can find them faster and more efficiently when searching. Without web crawlers, there would be nothing to tell search engines that your website has new content. Sitemaps can also play a part in that process. So web crawlers are, for the most part, a good thing. However, scheduling and load can sometimes become an issue, as a crawler might poll your site constantly. This is where a robots.txt file comes into play: it can help control crawl traffic and ensure that it doesn't overwhelm your server.

Web crawlers identify themselves to a web server through the User-Agent request header of an HTTP request, and each crawler has its own unique identifier. Most of the time you will need to examine your web server access logs to view web crawler traffic.
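As a rough illustration of checking logs for crawler traffic, the sketch below tallies requests per crawler. It assumes the logs use the common "combined" format, where the User-Agent is the last quoted field. The token list, the function names, and the sample log lines (which use documentation-only IP addresses) are made up for this example.

```python
import re
from collections import Counter

# Substrings that identify some of the crawlers discussed in this post.
# Illustrative only; extend it to match what appears in your own logs.
CRAWLER_TOKENS = ["Googlebot", "bingbot", "Slurp", "DuckDuckBot",
                  "Baiduspider", "YandexBot"]

# Assumption: combined log format, so the User-Agent is the last quoted field.
USER_AGENT_RE = re.compile(r'"([^"]*)"\s*$')


def crawler_name(log_line):
    """Return the first crawler token found in the line's User-Agent, or None."""
    match = USER_AGENT_RE.search(log_line)
    if not match:
        return None
    user_agent = match.group(1).lower()
    for token in CRAWLER_TOKENS:
        if token.lower() in user_agent:
            return token
    return None


def count_crawlers(log_lines):
    """Tally requests per crawler across an iterable of log lines."""
    counts = Counter()
    for line in log_lines:
        name = crawler_name(line)
        if name:
            counts[name] += 1
    return counts


# Made-up sample lines for demonstration.
sample_log = [
    '192.0.2.10 - - [10/Oct/2016:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.11 - - [10/Oct/2016:13:56:01 +0000] "GET /about HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0)"',
    '192.0.2.10 - - [10/Oct/2016:13:57:12 +0000] "GET /blog HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.12 - - [10/Oct/2016:13:58:40 +0000] "GET / HTTP/1.1" 200 2326 "-" '
    '"Mozilla/5.0 (Windows NT 10.0) Firefox/50.0"',
]

print(count_crawlers(sample_log))
```

Matching on substrings keeps the sketch short, but a User-Agent header can be spoofed, so a match only shows what the client claims to be.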

Robots.txt

By placing a robots.txt file at the root of your web server, you can define rules for web crawlers, such as allowing or disallowing certain assets from being crawled. Well-behaved web crawlers follow the rules defined in this file, although compliance is voluntary and bad bots often ignore them. You can apply generic rules that cover all bots, or get more granular and target a specific User-Agent string.

Example 1

This example instructs all search engine robots not to index any of the website's content. This is defined by disallowing the root / of your website.

Example 2

This example achieves the opposite of the previous one. In this case, the instructions are still applied to all user agents, however there is nothing defined within the Disallow instruction, meaning that everything can be indexed.
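One way to check how a crawler would read these two rule sets is Python's standard `urllib.robotparser` module. The robots.txt bodies below are reconstructed from the descriptions of Example 1 and Example 2, so treat them as assumptions; the helper name `allowed` and the sample path are also made up for this sketch.

```python
from urllib.robotparser import RobotFileParser

# Example 1, reconstructed from the description: block every crawler from everything.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# Example 2, reconstructed from the description: an empty Disallow blocks nothing.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""


def allowed(robots_txt, user_agent, path):
    """Parse robots.txt text and report whether user_agent may fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


print(allowed(BLOCK_ALL, "Googlebot", "/blog/post.html"))  # False
print(allowed(ALLOW_ALL, "Googlebot", "/blog/post.html"))  # True
```

Because both rule groups use `User-agent: *`, the result is the same for any crawler name passed in.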

To see more examples make sure to check out our in-depth post on how to use a robots.txt file.

Top 10 web crawlers and bots

There are hundreds of web crawlers and bots scouring the internet, but below is a list of 10 popular ones that we have collected based on what we see on a regular basis in our web server logs.

1. Googlebot

Googlebot is obviously one of the most popular web crawlers on the internet today as it is used to index content for Google's search engine. Patrick Sexton wrote a great article about what a Googlebot is and how it pertains to your website indexing. One great thing about Google's web crawler is that they give us a lot of tools and control over the process.

User-Agent

Full User-Agent string

Googlebot example in robots.txt

This example displays a little more granularity pertaining to the instructions defined. Here, the instructions are only relevant to Googlebot. More specifically, it is telling Google not to index a specific page (/no-index/your-page.html).
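The Googlebot-only rule described above can be checked the same way with Python's standard `urllib.robotparser`. The robots.txt body is reconstructed from the description (only the path `/no-index/your-page.html` comes from the text), and the other paths and the `may_fetch` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Reconstructed from the description: a rule group that only Googlebot reads.
GOOGLEBOT_RULES = """\
User-agent: Googlebot
Disallow: /no-index/your-page.html
"""

parser = RobotFileParser()
parser.parse(GOOGLEBOT_RULES.splitlines())


def may_fetch(user_agent, path):
    """Report whether the parsed rules let user_agent fetch path."""
    return parser.can_fetch(user_agent, path)


print(may_fetch("Googlebot", "/no-index/your-page.html"))  # False
print(may_fetch("Googlebot", "/some-other-page.html"))     # True
print(may_fetch("bingbot", "/no-index/your-page.html"))    # True
```

The last call returns True because no rule group in the file applies to bingbot, so nothing is disallowed for it.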

Besides Google's web search crawler, they actually have 9 additional web crawlers:

| Web crawler | User-Agent string |
|---|---|
| Googlebot News | Googlebot-News |
| Googlebot Images | Googlebot-Image/1.0 |
| Googlebot Video | Googlebot-Video/1.0 |
| Google Mobile (feature phone) | SAMSUNG-SGH-E250/1.0 Profile/MIDP-2.0 Configuration/CLDC-1.1 UP.Browser/6.2.3.3.c.1.101 (GUI) MMP/2.0 (compatible; Googlebot-Mobile/2.1; +http://www.google.com/bot.html) |
| Google Smartphone | Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.96 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) |
| Google Mobile Adsense | (compatible; Mediapartners-Google/2.1; +http://www.google.com/bot.html) |
| Google Adsense | Mediapartners-Google |
| Google AdsBot (PPC landing page quality) | AdsBot-Google (+http://www.google.com/adsbot.html) |
| Google app crawler (fetch resources for mobile) | AdsBot-Google-Mobile-Apps |

You can use the Fetch tool in Google Search Console to test how Google crawls or renders a URL on your site. See whether Googlebot can access a page on your site, how it renders the page, and whether any page resources (such as images or scripts) are blocked to Googlebot.

You can also see the Googlebot crawl stats per day, the number of kilobytes downloaded, and the time spent downloading a page.

See Googlebot robots.txt documentation.

2. Bingbot

Bingbot is a web crawler deployed by Microsoft in 2010 to supply information to its Bing search engine. It replaced what used to be the MSN bot.

User-Agent

Full User-Agent string

Bing has a tool very similar to Google's, called Fetch as Bingbot, within Bing Webmaster Tools. Fetch as Bingbot lets you request that a page be crawled and shown to you as Bing's crawler would see it. You will see the page code as Bingbot sees it, which helps you understand whether it is seeing your page as you intended.

See Bingbot robots.txt documentation.

3. Slurp Bot

Yahoo Search results come from the Yahoo web crawler Slurp and Bing's web crawler, as a lot of Yahoo is now powered by Bing. Sites should allow Yahoo Slurp access in order to appear in Yahoo Mobile Search results.

Additionally, Slurp does the following:

- Collects content from partner sites for inclusion within sites like Yahoo News, Yahoo Finance and Yahoo Sports.

- Accesses pages from sites across the web to confirm accuracy and improve Yahoo's personalized content for its users.

User-Agent

Full User-Agent string

See Slurp robots.txt documentation.

4. DuckDuckBot

DuckDuckBot is the web crawler for DuckDuckGo, a search engine that has become quite popular for its focus on privacy and not tracking its users. It now handles over 12 million queries per day. DuckDuckGo gets its results from over four hundred sources. These include hundreds of vertical sources delivering niche Instant Answers, DuckDuckBot (its own crawler), and crowd-sourced sites (Wikipedia). It also shows more traditional links in the search results, which it sources from Yahoo!, Yandex, and Bing.

User-Agent

Full User-Agent string

It respects WWW::RobotRules and originates from these IP addresses:

- 72.94.249.34

- 72.94.249.35

- 72.94.249.36

- 72.94.249.37

- 72.94.249.38
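Since a User-Agent header is easy to fake, a request claiming to be DuckDuckBot can be cross-checked against the addresses above. The following is a minimal sketch of that idea: the IP list is copied from this post and may be out of date, and the function name and sample inputs are made up for illustration.

```python
import ipaddress

# Addresses copied from the list above; they may have changed since publication.
DUCKDUCKBOT_IPS = {
    ipaddress.ip_address(ip)
    for ip in (
        "72.94.249.34",
        "72.94.249.35",
        "72.94.249.36",
        "72.94.249.37",
        "72.94.249.38",
    )
}


def is_listed_duckduckbot(user_agent, remote_ip):
    """True only if the UA claims DuckDuckBot and the IP is on the list above."""
    if "duckduckbot" not in user_agent.lower():
        return False
    try:
        return ipaddress.ip_address(remote_ip) in DUCKDUCKBOT_IPS
    except ValueError:
        # Not a valid IP address string.
        return False


print(is_listed_duckduckbot("DuckDuckBot", "72.94.249.35"))  # True
print(is_listed_duckduckbot("DuckDuckBot", "203.0.113.7"))   # False
```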

5. Baiduspider

Baiduspider is the official name of the Chinese Baidu search engine's web crawling spider. It crawls web pages and returns updates to the Baidu index. Baidu is the leading Chinese search engine, with an 80% share of the overall search engine market in mainland China.

User-Agent

Full User-Agent string

Besides Baidu's web search crawler, they actually have 6 additional web crawlers:

| Web crawler | User-Agent string |
|---|---|
| Image Search | Baiduspider-image |
| Video Search | Baiduspider-video |
| News Search | Baiduspider-news |
| Baidu wishlists | Baiduspider-favo |
| Baidu Union | Baiduspider-cpro |
| Business Search | Baiduspider-ads |
| Other search pages | Baiduspider |

See Baidu robots.txt documentation.

6. Yandex Bot

YandexBot is the web crawler for one of the largest Russian search engines, Yandex. According to LiveInternet, for the three months ended December 31, 2015, Yandex generated 57.3% of all search traffic in Russia.

User-Agent

Full User-Agent string

There are many different User-Agent strings that the YandexBot can show up as in your server logs. See the full list of Yandex robots and Yandex robots.txt documentation.

7. Sogou Spider

Sogou Spider is the web crawler for Sogou.com, a leading Chinese search engine that was launched in 2004. As of April 2016 it has a rank of 103 in Alexa's internet rankings.

User-Agent

8. Exabot

Exabot is the web crawler for Exalead, a search engine based in France. Exalead was founded in 2000 and now has more than 16 billion pages indexed.

User-Agent

See Exabot robots.txt documentation.

9. Facebook external hit

Facebook allows its users to send links to interesting web content to other Facebook users. Part of how this works on the Facebook system involves the temporary display of certain images or details related to the web content, such as the title of the webpage or the embed tag of a video. The Facebook system retrieves this information only after a user provides a link.

One of their main crawling bots is Facebot, which is designed to help improve advertising performance.

User-Agent

See Facebot robots.txt documentation.

10. Alexa crawler

ia_archiver is the web crawler for Amazon's Alexa internet rankings. As you probably know, Alexa collects information to show rankings for both local and international sites.

User-Agent

Full User-Agent string

See Ia_archiver robots.txt documentation.

Bad bots

As mentioned above, most of the crawlers in this list are good ones. You generally don't want to block Google or Bing from indexing your site unless you have a good reason. But what about the thousands of bad bots? KeyCDN released a feature back in February 2016, called Block Bad Bots, that you can enable in your dashboard. KeyCDN uses a comprehensive list of known bad bots and blocks them based on their User-Agent string.

When a new Zone is added the Block Bad Bots feature is set to disabled. This setting can be set to enabled instead if you want bad bots to automatically be blocked.
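To make the idea of User-Agent based blocking concrete, here is a minimal sketch of such a check. It is not KeyCDN's implementation: the blocklist tokens are invented placeholders, and the `enabled` flag simply mirrors the fact that the feature is off until you turn it on.

```python
# Hypothetical blocklist tokens, used only for illustration.
# A real list of known bad bots would be far longer.
BAD_BOT_TOKENS = ("badbot", "evilscraper")


def should_block(user_agent, enabled=False, blocklist=BAD_BOT_TOKENS):
    """Return True when blocking is enabled and the User-Agent contains a listed token."""
    if not enabled:
        return False
    ua = (user_agent or "").lower()
    return any(token in ua for token in blocklist)


print(should_block("Mozilla/5.0 (compatible; BadBot/1.0)", enabled=True))  # True
print(should_block("Mozilla/5.0 (compatible; BadBot/1.0)"))                # False
```

A bot that sends a browser-like User-Agent would pass this check, so string matching only catches bots that identify themselves.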

Bot resources

Perhaps you are seeing some user-agent strings in your logs that have you concerned. Here are a couple of good resources where you can look up popular bad bots, crawlers, and scrapers.

Caio Almeida also has a pretty good list on his crawler-user-agents GitHub project.

Summary

There are hundreds of different web crawlers out there, but hopefully you are now familiar with a couple of the more popular ones. Again, be careful when blocking any of these, as doing so could cause indexing issues. It is always good to check your web server logs to see how often they are actually crawling your site.

Did we miss any important ones? If so please let us know below and we will add them.

Saturday, February 01, 2020

You probably know how to use basic functions in Excel. It’s easy to do things like sorting, applying filters, making charts, and outlining data with Excel. You can even perform advanced data analysis using pivot tables and regression models. This becomes an easy job once the live data is in a structured format. The problem is, how can we extract data at scale and put it into Excel? This can be tedious if you are doing it manually by typing, searching, copying, and pasting repetitively. Instead, you can automate data scraping from websites to Excel.

In this article, I will introduce several ways to scrape web data into Excel that save you time and energy.

Disclaimer:

There are many other ways to scrape websites using programming languages like PHP, Python, Perl, Ruby, etc. Here we only talk about how non-coders can scrape data from websites into Excel.

Getting web data using Excel Web Queries

Besides transferring data from a web page manually by copying and pasting, you can use Excel Web Queries to quickly retrieve data from a standard web page into an Excel worksheet. A web query can automatically detect tables embedded in the web page's HTML. Excel Web Queries can also be used in situations where a standard ODBC (Open Database Connectivity) connection is hard to create or maintain. You can scrape a table directly from a website using Excel Web Queries.

The process boils down to several simple steps (Check out this article):

1. Go to Data > Get External Data > From Web

2. A browser window named “New Web Query” will appear

3. In the address bar, write the web address

4. The page will load and will show yellow icons against data/tables.

5. Select the appropriate one

6. Press the Import button.

Now you have the web data scraped into the Excel Worksheet - perfectly arranged in rows and columns as you like.
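For readers curious about what a web query does behind the scenes, the sketch below shows the same idea in Python using only the standard library: find a table in a page's HTML and write its rows out as CSV, which Excel can open. This is not how Excel implements Web Queries. The class name, function name, and sample page are all made up for illustration, and nested tables are not handled.

```python
import csv
import io
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the cell text of every <table> in a page (nested tables not handled)."""

    def __init__(self):
        super().__init__()
        self.tables = []   # each table is a list of rows; each row is a list of cells
        self._row = None   # cells of the row being read, or None outside a row
        self._cell = None  # text fragments of the cell being read, or None outside a cell

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def table_to_csv(html, index=0):
    """Return the table at position index in the page as CSV text."""
    parser = TableExtractor()
    parser.feed(html)
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(parser.tables[index])
    return buffer.getvalue()


# Made-up sample page standing in for a real website.
SAMPLE_PAGE = """
<html><body>
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>19.50</td></tr>
</table>
</body></html>
"""

print(table_to_csv(SAMPLE_PAGE))
```

Saving the returned text to a `.csv` file gives you something Excel opens directly as rows and columns.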

Getting web data using Excel VBA

Most of us use formulas in Excel (e.g. =AVERAGE(...), =SUM(...), =IF(...)) a lot but are less familiar with the built-in language, Visual Basic for Applications, a.k.a. VBA. It’s commonly known as “Macros”, and such Excel files are saved as .xlsm. Before using it, you first need to enable the Developer tab in the ribbon (right-click the ribbon -> Customize the Ribbon -> check Developer). Then set up your layout. In this developer interface, you can write VBA code attached to various events. Click HERE (https://msdn.microsoft.com/en-us/library/office/ee814737(v=office.14).aspx) to get started with VBA in Excel 2010.

Using Excel VBA is going to be a bit technical, so it is not very friendly for the non-programmers among us. VBA works by running macros, step-by-step procedures written in Excel Visual Basic. To scrape data from websites to Excel using VBA, we need to build or obtain a VBA script that sends requests to web pages and reads the data they return. It’s common to use VBA with XMLHTTP and regular expressions to parse the web pages. On Windows, you can use VBA with WinHTTP or InternetExplorer to scrape data from websites to Excel.
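The request-then-parse pattern described above is the same in any language. The sketch below shows it in Python rather than VBA, with `urllib.request` standing in for XMLHTTP and the `re` module for VBA regular expressions. The price pattern, the default User-Agent value, and the function names are assumptions made for this example, and `fetch_html` performs a real network request when called.

```python
import re
import urllib.request

# Hypothetical pattern: prices written like $12.34 somewhere in the page markup.
PRICE_RE = re.compile(r"\$(\d+(?:\.\d{2})?)")


def fetch_html(url, user_agent="example-scraper/0.1"):
    """Download a page and return its body as text (performs a network request)."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_prices(html):
    """Return every price matched by PRICE_RE as a float, in page order."""
    return [float(price) for price in PRICE_RE.findall(html)]


# Parsing a made-up snippet, so no network access is needed here.
snippet = '<span class="price">$9.99</span> <span class="price">$20</span>'
print(extract_prices(snippet))  # [9.99, 20.0]
```

Regular expressions are fine for simple, stable markup, but they break easily when a page layout changes, which is one reason dedicated HTML parsers are often preferred.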

With some patience and practice, you will find it worthwhile to learn some Excel VBA and HTML, as they make scraping web data into Excel much easier and help automate the repetitive work. There are plenty of materials and forums where you can learn how to write VBA code.

Automated Web Scraping Tools

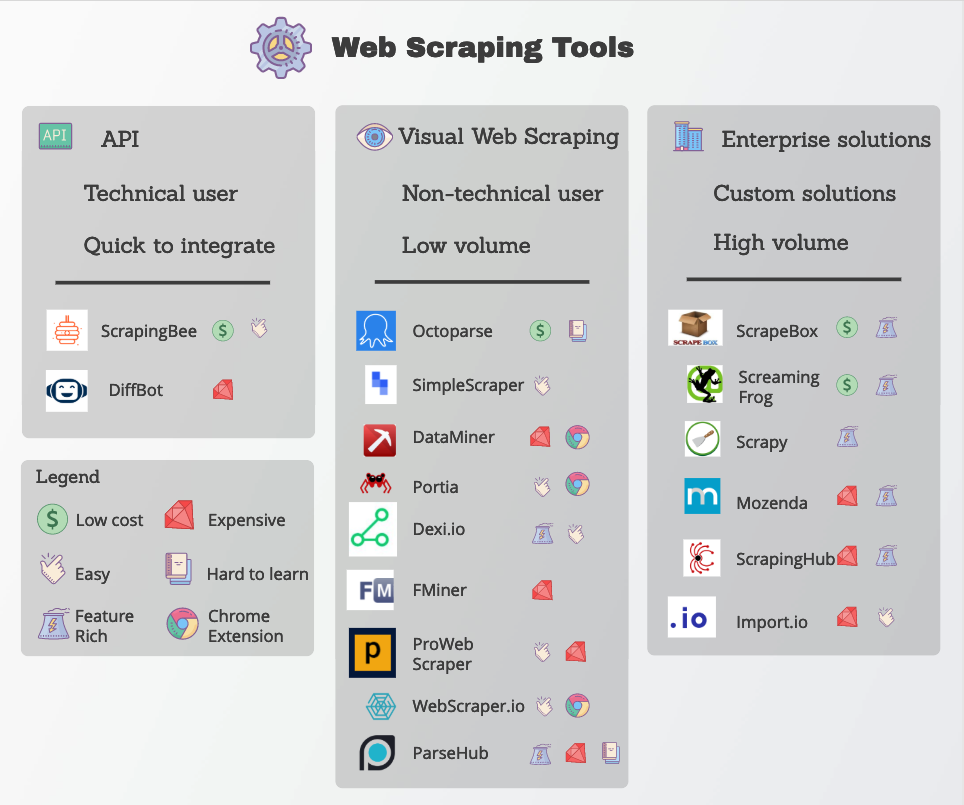

For anyone looking for a quick tool to scrape data off pages to Excel who doesn’t want to set up VBA code themselves, I strongly recommend automated web scraping tools like Octoparse, which scrape data into your Excel worksheet directly or via API. There is no need to learn to program. You can pick one of the free web scraping tools from the list, start extracting data from websites immediately, and export the scraped data into Excel. Each web scraping tool has its pros and cons, so you can choose the one that best fits your needs.

Check out this post and try out these TOP 30 free web scraping tools

Outsource Your Web Scraping Project

If time is your most valuable asset and you want to focus on your core business, outsourcing such complicated web scraping work to a proficient team with the right experience and expertise would be the best option. Scraping data from websites is difficult because anti-scraping measures restrict the practice. A proficient web scraping team can help you get data from websites in a proper way and deliver structured data to you in an Excel sheet or in any format you need.

Read Latest Customer Stories: How Web Scraping Helps Business of All Sizes
